The web is one big collection of data that’s increasing by the day. How can you use all of that valuable information in your own applications? You could resort to one of the many publicly available datasets, or you could create your own resource by scraping the web for data. In this blog post, we’ll look at Python web scraping: how it’s done, when it’s okay and when you probably shouldn’t do it.

What is Web Scraping?

Often, the only way to discover patterns in data is to examine lots of it. So instead of reading one web page, you might want to collect thousands of samples from a domain. Sure, you could do it manually, by copying and pasting text from websites of interest into your local spreadsheet. But the smarter, more scalable way is to learn to write a program that does all that for you.

Such a program would typically combine a web crawler and a web scraper. The crawler is responsible for finding content of interest (e.g., by going through an alphabetically ordered list of URLs), whereas the scraper extracts valuable information (sans boilerplate code) from an HTML document.

At a high level, crawling and scraping are exactly what Google and other search engines do when they index the web’s pages in their searchable catalogs. However, this article is about how you as an individual can use web scraping with Python in your own applications.

Is Web Scraping Legal?

Let’s be real: Most companies probably don’t want you to collect all their data without paying them a single penny. However, as far as the law is concerned web scraping appears to be in a gray area. In 2012, a hacker was given jail time for scraping users’ email addresses from AT&T — even though he and his partner were only able to do so because of a security hole in the company’s website. It should be noted that the hacker’s guilty verdict was eventually overturned, albeit on procedural grounds.

There are a few rules of thumb to follow when scraping data from websites. First, look out for a site’s robots.txt file that spells out the robots exclusion standard for web-crawling bots. Found at the root of a web page, it lists the pages that the site owners don’t want you to crawl. For example, check out Facebook’s robots.txt for a very extensive list. Even if a page is not listed in the robots.txt but deals with sensitive data like personal information, we would definitely steer clear.

Second, one big problem caused by web crawlers can be overloading — and eventually crashing — a site’s server. This is something you’ll absolutely want to avoid because it may be interpreted as a malicious attack. If you overload a company’s servers, they’ll put you on a list and block your IP from future access to their site. So pace your requests accordingly.

Finally, web scraping isn’t the only way to collect a website’s data. Many companies offer APIs through which you can obtain their data in an organized and authorized manner. Granted, they’ll control what kind of information you get through their API — but at least with this way you can be sure they do not actually mind you having it. As a corollary, we would generally avoid companies that have a history of going after people who’ve tried to scrape their content.

What Can Web Scraping Be Used For?

One of the most successful tech projects of 2020 was Avi Schiffmann’s tracker of Coronavirus cases around the world. How did a 17-year-old high school student build such an important resource that has attracted over 1.5 billion visitors to date? Put simply, he wrote tons of web scrapers that collected Covid-19 numbers from government resources and then aggregated all that data in one central place.

Schiffmann’s case shows how oftentimes, an innovative product is not just about inventing something from scratch. It can be as simple as combining two or more different resources to paint a much bigger picture. Search online for a collection of other data science projects powered by scraped data, and you’ll find tools that predict stock prices to ones that analyze job and housing markets.

How Does Python Web Scraping Work?

Python is great for web scraping. Let’s look at some handy Python tools for web scraping that you might want to use. Typically, you’d want one library to open a web page for you, and another one to parse it.

Open HTTP

So how do you access web content without using a browser? Simply use a library that can handle the hypertext transfer protocol (HTTP) for you. Python has several such libraries, but the most recent (and easiest to use) is requests. It’s built on top of the urllib3 library and makes handling your HTTP requests a walk in the park.

Parse HTML

A requests call returns a string object full of HTML tags. If you’re not familiar with the hypertext markup language, this can look confusing or even intimidating at first. Fear not! As usual, Python has your back.

The most popular package for parsing your HTML string is BeautifulSoup. Named after both the Turtle Soup song from Alice in Wonderland and the notorious “tag soup” that you get when web pages don’t use clean HTML, BeautifulSoup provides simple and easy-to-use commands to extract relevant information from HTML documents.

There are many more packages that do something similar, such as boilerpipe and newspaper3k (designed explicitly for scraping newspaper articles). In addition, you can write your own regular expressions to parse HTML strings. Regular expressions, or regexes, are string search patterns that make for powerful tools in processing written language. Python offers the re package as part of its standard library.

A Python Web Scraping Example

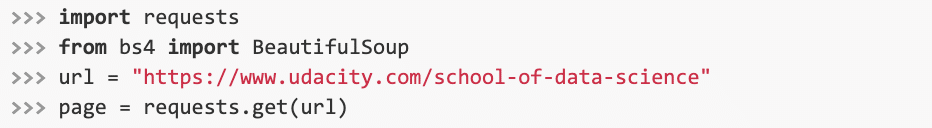

Let’s say that we’re interested in Udacity’s “School of Data Science” course programs. We want to know what Nanodegrees are available as well as their start dates. How would we go about finding this information? First of all, we import our libraries and load our URL:

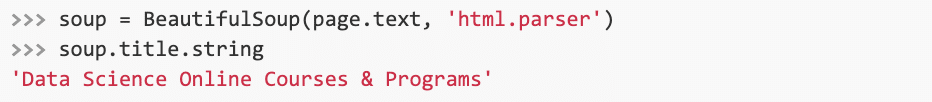

We then make our “soup” with BeautifulSoup and extract the site’s title.

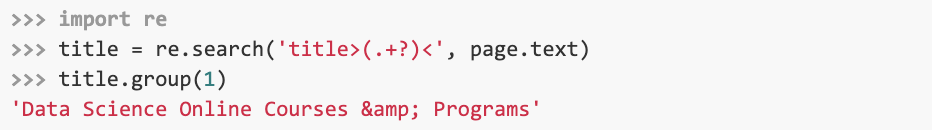

As you can see, BeautifulSoup provides handy shortcuts for extracting information from an HTML string. We could just as well write our own regexes to obtain that information. But that solution can be quite tedious, especially if we’re dealing with an HTML “soup.” Using the website’s “View Page Source” option, we’d have to look through the source text to know exactly which pattern we’re looking for. Once we’ve accomplished that, we can write our regex:

If you’re not sure how to read that regular expression and want to learn more, take a look at these two cool regex resources: an interactive tutorial for learning regex patterns, and an online tester to try out your regexes.

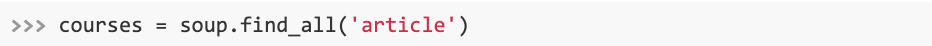

Back to our beautiful soup. Let’s say we know that all our courses on the Udacity course page are tagged as “articles.” With that knowledge, we can easily extract them:

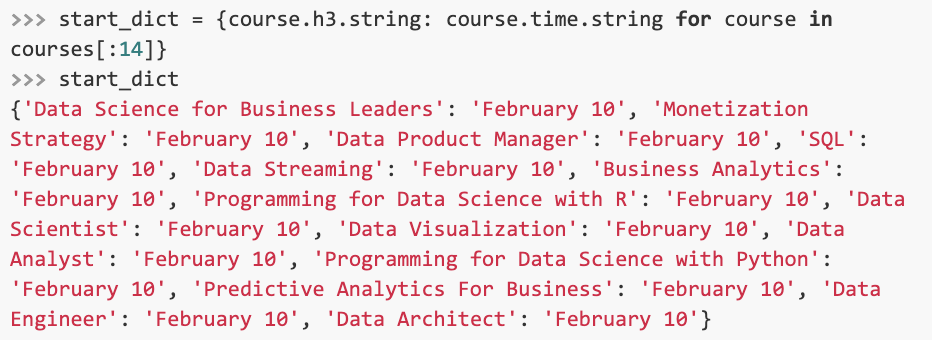

We’ve now split our soup into many small bowls. For each of these, we then extract the start date, which we store in a dictionary for easy retrieval. We only use the first 13 items stored in our “courses” variable because that’s the number of courses shown on the page.

Alright, they all start on the same date; nothing too exciting 🙂 But maybe you can find some more interesting information on your own, using the tools we’ve just introduced. Try looking into the courses’ levels!

The Next Level

Obtaining data via Python web scraping is just one — albeit, important — step in the programming pipeline. Enroll in our expert-taught Introduction to Programming Nanodegree to master all the phases of a coding project, from scraping and analyzing data to visualizing it on a webpage.