More than three decades following Ernő Rubik’s invention of the Rubik’s Cube in 1974, a group of mathematicians and computer scientists gathered to study the properties of the cube using a cluster of Google supercomputers. They wanted to determine what Rubik’s Cube enthusiasts (cubers) call “God’s number” — the smallest number of moves required to solve the cube from any starting configuration.

Their research provides lessons valuable to both cubers and computer programmers.

What Are PLL Algorithms?

The cubing community had long suspected God’s Number to fall somewhere in the 1920s, before it was proven to be exactly 20 in 2010. Over the years, cubers have developed a number of cube-solving methods, each varying in complexity and number of moves required. Permutation of the Last Layer (PLL) is a collection of Rubik’s Cube algorithms that describe sequences of rotations for advancing the cube towards its solved state.

Rubik’s Cube algorithms work by splitting the cube into smaller units whose solutions are combined to transform the randomized cube into the solved configuration. The simplest approach splits the cube into bottom, middle, and top layers. Solving each successive layer becomes more difficult since the arrangements of the previous layers need to be kept in place. PLL algorithms allow the solver to isolate and work on the cube’s final layer, without affecting the other layers.

Rubik’s Cube and Computer Programming

Computer programming and cube solving require similar problem-solving skills, namely algorithmic thinking and pattern recognition. The following are some lessons a novice programmer can learn from Rubik’s Cube masters.

Breaking Down the Problem

Separating a Rubik’s Cube into layers and solving each layer individually reduces the overall complexity since it’s much easier to solve a single layer than the whole cube. PLL algorithms employ a similar method for solving the last layer – they split the task into sequences of subtasks, such as swappings and permutations, which ultimately form the solution to the cube. Likewise, fundamental programming problems, such as sorting and searching, are solved by splitting the input into smaller, more manageable parts.

This is a well-known algorithmic paradigm known as the divide-and-conquer method. The method works by breaking down a problem into subproblems of the same type, each of which can be further broken down until being directly solvable. The solution to the original problem is reached by connecting the dots and reassembling the solutions to the subproblems.

Similarly, computer programs are built by separating the program into small, independent units called functions. Combined, functions perform everything required for the program’s execution, but individually, they only perform one part of the program’s functionality.

Functions make the program modular, which not only conceptually simplifies the problem, but also the writing of code. It makes testing straightforward since small functions have fewer potential errors and edge cases. And if something goes wrong in the execution of the program’s logic, modularity allows us to isolate the parts that contain bugs, without our having to inspect each line to find the error.

Pattern Recognition and Abstractions

Pattern recognition allows you to find and exploit similarities between a solved and an unsolved problem. For instance, PLL algorithms for solving a 3x3x3 Rubik’s Cube also apply to a 4x4x4 cube, though the latter comes with an additional complication of parity. Rather than starting from scratch, you can start solving a 4x4x4 cube similar to how you’d work on a 3x3x3 cube, and then you’d only have to find a solution to the new, unsolved problem.

To put this into perspective, imagine you’re given a list of elements and your task is to find the optimal subsequence within that list, such that the subsequence satisfies some condition. A straightforward solution is to iterate over the elements in the list and enumerate all possible sequences, and then check which satisfy the condition – a method also known as “brute force.” However, with experience, you’ll be able to recognize that this problem class usually requires the sliding-window technique.

Abstracting away the specifics of implementation and thinking about solutions in terms of techniques rather than programming syntax allows you to intelligently and efficiently reason about solving tactics.

Programming languages are ultimately layers of abstraction on top of machine code. The code from high-level programming languages is translated into machine code, which then performs fundamental operations, such as storing and loading numbers into memory or adding them together. It’s tough to see how such simple operations can in tandem carry out more complex tasks, but they underlie all computer tools.

The first piece of code most programmers write is a “Hello, world!” print statement. But even many experienced programmers will have a vague understanding of how that function works under the hood. And they don’t need to, because by abstracting away the details, programming languages allow programmers to be more efficient by focusing on high-level problem-solving. This is why abstraction is at the essence of computer science.

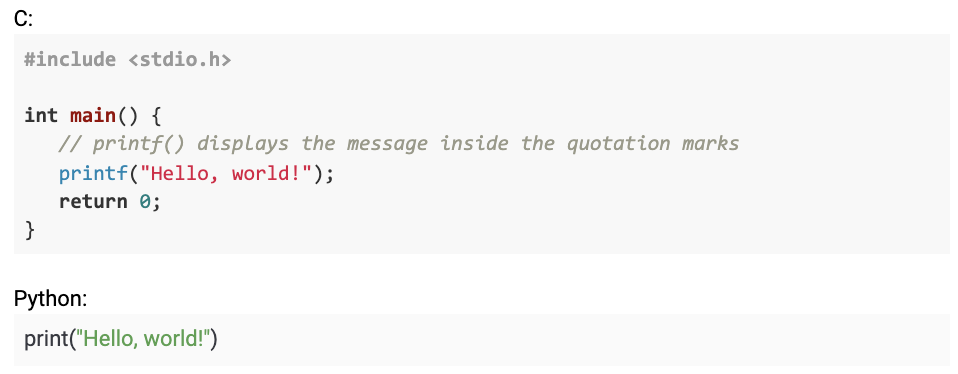

Let’s take a look at two code examples that print “Hello, world!” in both C and Python.

Although both Python and C are high-level programming languages, Python has a higher level of abstraction which makes its code concise. However, the additional layers of abstraction come at the cost of computation speed, which is why some applications still require low-level programming languages. They are more tedious and complex to write, but the trade-off is that the programmer will have greater control.

Complexity

In theory, brute force is a fail-safe approach, but in practice, it’s highly inefficient because it scales poorly as search spaces increase. Given that we can choose from 18 different twists per move, if we were to use brute force to find a sequence of 20 twists to advance the cube to the solved position, the number of possible sequences would be 1820, or 12 septillions. It’s easy to see why cubers came up with more sophisticated solving techniques.

Strategies for solving the Rubik’s Cube include the Screwdriver Method, the “Bottom-up” Method and the CFOP Method, which includes the aforementioned PLL algorithms. Whereas they’ll all result in solved configurations, they differ in solving speed and complexity. While a cuber competing in the Fewest Moves Count Event will prefer the algorithm that solves the cube in as few moves as possible, a speedcuber will choose the fastest one.

Similarly, in computer science, algorithms are ranked relative to how their runtime or space requirements grow with input size. For smaller problems, the additional computing time required by an inelegant solution like brute force might be a better choice than spending more time coming up with a more intelligent approach. Efficiency increases usually entail increases in complexity, bugs, and error.

When choosing an algorithm, the programmer needs to consider the complexity of the task, the difficulty of implementing the algorithm, time restrictions, and the availability of computational resources.

Conclusion

In this article, we drew parallels between solving a Rubik’s Cube and computer programming. We explained the similarities between the two, and by analogizing between the specifics of PLL algorithms and programming concepts, we explained some of the fundamental ideas behind computer science and programming.

If you want to learn more about the technical concepts covered in this article and dive into practical coding exercises, check out our Introduction to Programming course.