Lesson 1

Deploying a Hadoop Cluster

Course

Deploy your own Hadoop cluster to crunch some big data!

Last Updated March 7, 2022

Prerequisites:

No experience required

Course Lessons

Lesson 2

Problem Set: StackExchange Posts

Lesson 3

Deploying a Hadoop cluster with Ambari

Lesson 4

Problem Set: Reddit comments

Lesson 5

On-demand Hadoop clusters

Lesson 6

Project Prep

Taught By The Best

Mat Leonard

Content Developer

Mat is a former physicist, research neuroscientist, and data scientist. He completed his PhD and a postdoctoral fellowship at the University of California, Berkeley.

The Udacity Difference

Combine technology training for employees with industry experts, mentors, and projects to build the critical thinking that drives innovation. Our proven upskilling system pursues success relentlessly.

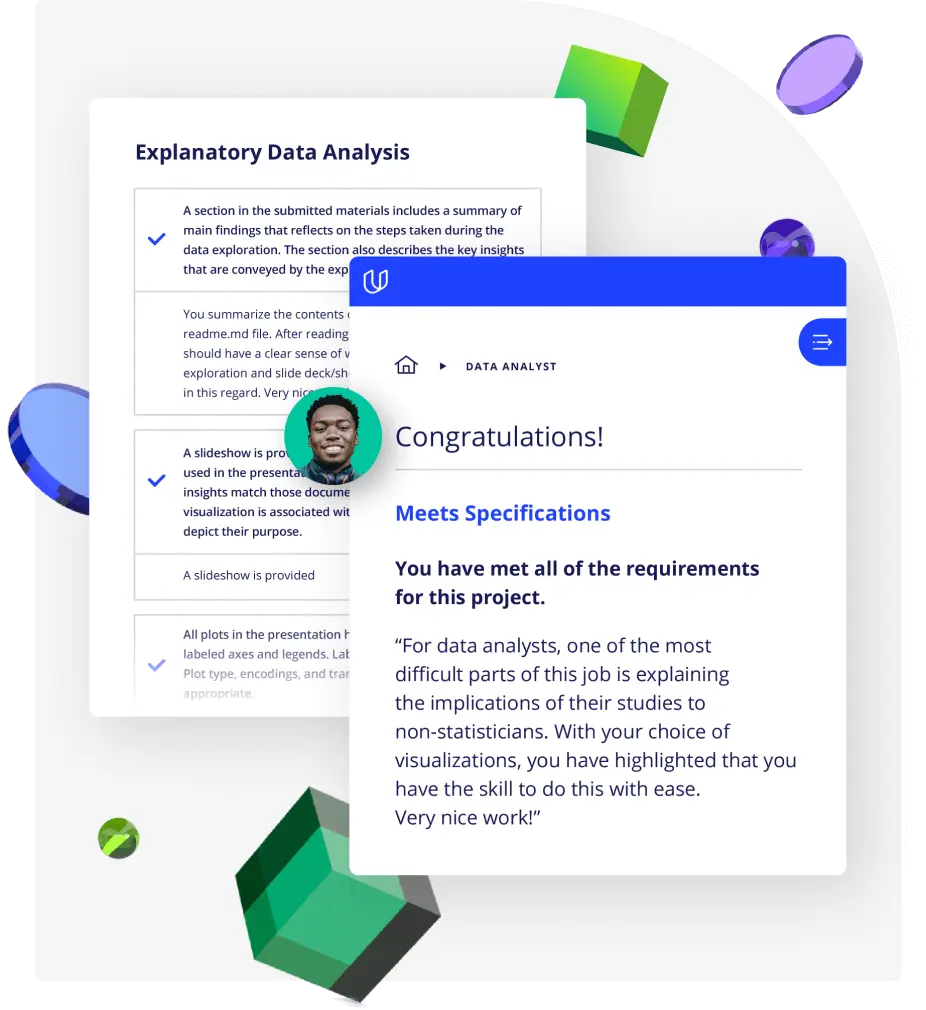

Demonstrate proficiency with practical projects

Projects are based on real-world scenarios and challenges, allowing you to apply the skills you learn to practical situations, while giving you real hands-on experience.

Gain proven experience

Retain knowledge longer

Apply new skills immediately

Top-tier services to ensure learner success

Reviewers provide timely and constructive feedback on your project submissions, highlighting areas of improvement and offering practical tips to enhance your work.

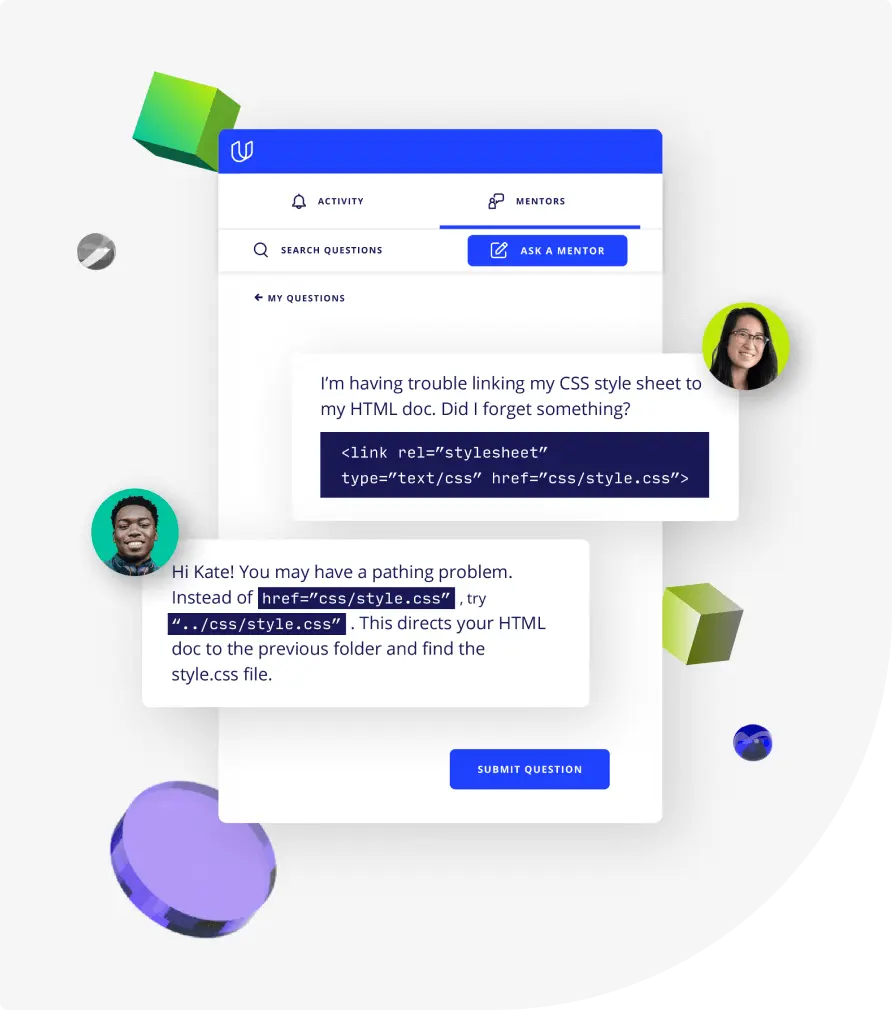

Get help from subject matter experts

Learn industry best practices

Gain valuable insights and improve your skills