Lesson 1

Introduction to Probabilistic Models

Welcome to Fundamentals of Probabilistic Graphical Models. In this lesson, we will cover the course overview and prerequisites, and give a brief introduction to probability.

Course

Learn to use Bayes Nets to represent complex probability distributions, along with algorithms for sampling from those distributions. Then learn the algorithms used to train, predict, and evaluate Hidden Markov Models for pattern recognition. HMMs have been used for gesture recognition in computer vision, gene sequence identification in bioinformatics, speech generation and part-of-speech tagging in natural language processing, and more.

Advanced

3 weeks

Real-world Projects

Completion Certificate

Last Updated June 19, 2024

Lesson 1

Welcome to Fundamentals of Probabilistic Graphical Models. In this lesson, we will cover the course overview and prerequisites, and give a brief introduction to probability.

Lesson 2

Sebastian Thrun briefly reviews basic probability theory including discrete distributions, independence, joint probabilities, and conditional distributions to model uncertainty in the real world.

Lesson 3

In this section, you'll learn how to build a spam email classifier using the naive Bayes algorithm.

Lesson 4

Sebastian explains using Bayes Nets as a compact graphical model to encode probability distributions for efficient analysis.

Lesson 5

Sebastian explains probabilistic inference using Bayes Nets, i.e. how to use evidence to calculate probabilities from the network.

Lesson 6

Learn about Hidden Markov Models and apply them to part-of-speech tagging, a popular problem in natural language processing.

Lesson 7

Thad explains the Dynamic Time Warping technique for working with time-series data.

Lesson 8 • Project

In this project, you'll build a hidden Markov model for part-of-speech tagging with a universal tagset.

Sebastian Thrun

Founder and Executive Chairman, Udacity

As the Founder and Chairman of Udacity, Sebastian's mission is to democratize education by providing lifelong learning to millions of students worldwide. He is also the founder of Google X, where he led projects including the Self-Driving Car, Google Glass, and more.

Thad Starner

Professor of Computer Science, Georgia Tech

Thad Starner is the director of the Contextual Computing Group (CCG) at Georgia Tech and is also the longest-serving Technical Lead/Manager on Google's Glass project.

Combine technology training for employees with industry experts, mentors, and projects, for critical thinking that pushes innovation. Our proven upskilling system goes after success—relentlessly.

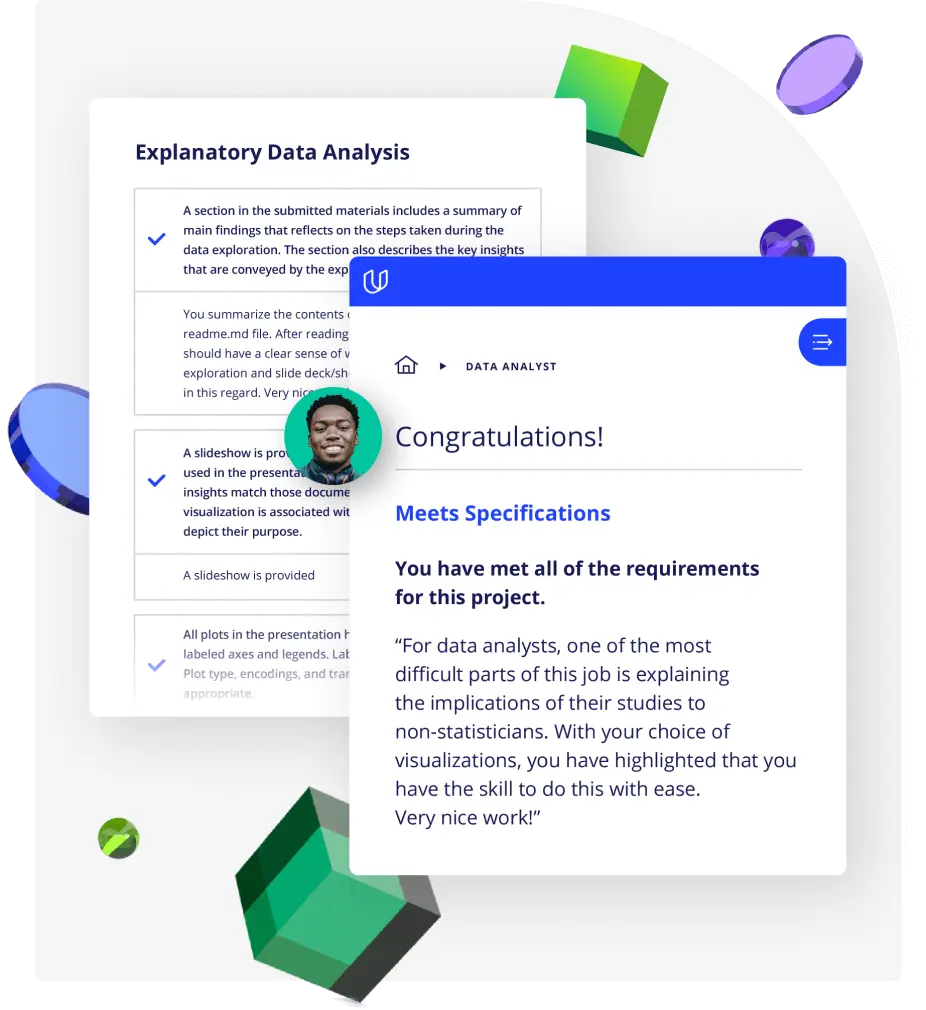

Demonstrate proficiency with practical projects

Projects are based on real-world scenarios and challenges, allowing you to apply the skills you learn to practical situations, while giving you real hands-on experience.

Gain proven experience

Retain knowledge longer

Apply new skills immediately

Top-tier services to ensure learner success

Reviewers provide timely and constructive feedback on your project submissions, highlighting areas of improvement and offering practical tips to enhance your work.

Get help from subject matter experts

Learn industry best practices

Gain valuable insights and improve your skills

Full Catalog Access

One subscription opens up this course and our entire catalog of projects and skills.
