You might not always notice it, but Deep Learning is everywhere. Thanks to advances in computing power and the availability of large datasets, the past years have seen an explosion of applications based on this Machine Learning technique. In this article, we introduce you to TensorFlow, Google’s popular Deep Learning framework.

Some knowledge of Python and the basics of Machine Learning will help you understand the examples. If your Python foundations still feel a bit shaky, you might want to check out our Introduction to Programming. Are you ready? Let’s get started.

What Is Deep Learning?

Deep Learning is a type of Machine Learning. Its models are conceptualized as graphs of interconnected layers. Most of these layers are “hidden,” meaning that they sit between the input and output layers, so we cannot directly observe their inputs and outputs.

In such a graph, information is passed along and transformed in a way loosely inspired by the neurons in the brain. This is why these deep models are called artificial neural networks.
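As a rough sketch of what a single node in such a graph computes, assuming the common textbook formulation: an artificial neuron multiplies its inputs by weights, adds a bias, and passes the result through a nonlinearity (here ReLU). The numbers are arbitrary illustrative values, not learned ones.

```python
import tensorflow as tf

# Three input signals for one example (a batch of size 1).
inputs = tf.constant([[0.5, -1.0, 2.0]])

# Illustrative weights and bias; in a real network these are learned during training.
weights = tf.constant([[0.4], [0.3], [0.1]])
bias = tf.constant([0.1])

# Weighted sum plus bias, then ReLU: the neuron only "fires" for positive sums.
output = tf.nn.relu(tf.matmul(inputs, weights) + bias)
print(output.numpy())  # approximately [[0.2]]
```

A layer is simply many such neurons side by side, which is why the weights are stored as a matrix rather than a single vector.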

What Is TensorFlow?

TensorFlow is Google’s open-source library for Deep Learning. It was first released in 2015 and provides stable APIs in both Python and C. When building a TensorFlow model, you start out by defining the graph with all its layers, nodes, and variable placeholders.

TensorBoard, the framework’s visualization tool, allows you to investigate and debug your graph. In the next step, you compile the graph and fit the model by running the data through it; this is what the “Flow” part of TensorFlow’s name refers to. And what about tensors? A tensor is simply a multidimensional array of data.

Imagine a matrix (or table), but with three, four, or 100 dimensions. Hard, right? Not for your computer.
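In TensorFlow, the number of dimensions of a tensor is called its rank. This minimal sketch builds tensors of rank 0 to 3 and prints their ranks and shapes; the values are arbitrary placeholders.

```python
import tensorflow as tf

scalar = tf.constant(3.0)                        # rank 0: a single number
vector = tf.constant([1.0, 2.0, 3.0])            # rank 1: a list of numbers
matrix = tf.constant([[1.0, 2.0], [3.0, 4.0]])   # rank 2: a table
cube = tf.zeros([2, 3, 4])                       # rank 3: a stack of two 3x4 tables

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    print(name, "rank:", int(tf.rank(t)), "shape:", t.shape)
```

Adding a dimension only means adding one more entry to the shape list, so going from three to 100 dimensions changes nothing about how the computer handles the data.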

TensorFlow’s Python interface is popular with Machine Learning professionals and enthusiasts because it is easier to use than the lower-level C and C++ APIs.

Alternatives to TensorFlow

While TensorFlow quickly became the most frequently used Deep Learning framework, several libraries predate it, such as Torch, Theano, and Caffe. A popular TensorFlow alternative is Keras.

It is written in Python and functions as a high-level API with TensorFlow as the default backend (formerly Theano). Many people who work with Deep Learning models appreciate Keras because it is easier to use than TensorFlow.

In Keras, you simply define your graph from ready-made building blocks and get to work. On the downside, it is less flexible than TensorFlow.
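To give a feel for these building blocks, here is a minimal sketch using `tf.keras`, the Keras API bundled with TensorFlow 2.x (standalone Keras looks very similar). The layer sizes, the four input features, and the three classes are arbitrary assumptions for illustration.

```python
import tensorflow as tf

# A small classifier assembled from ready-made layers; sizes are illustrative assumptions.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),                      # 4 input features
    tf.keras.layers.Dense(16, activation="relu"),    # one hidden layer
    tf.keras.layers.Dense(3, activation="softmax"),  # probabilities for 3 classes
])

# One call wires up the optimizer, the loss, and the metrics to track.
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
model.summary()

# Forward pass of the (still untrained) model on a dummy batch of two examples.
print(model(tf.zeros([2, 4])).shape)  # (2, 3)
```

The trade-off mentioned above shows here: you never touch weights or gradients directly, which is convenient until you need something the ready-made layers do not offer.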

TensorFlow, Keras and TensorFlow Lite

TensorFlow 2.0, released in the fall of 2019, brought many usability improvements. This has lowered the barrier for Deep Learning beginners who want to start out using TensorFlow directly with Python.

As a major change, Keras is now integrated as TensorFlow’s default high-level interface. The result is a nice combination of TensorFlow’s customizability and Keras’ ease of use. But once you have trained your deep model, how do you make it portable so that many people can use it? TensorFlow Lite was made for that purpose.

It converts your trained model into a compact file (which can optionally be shrunk further through quantization) that can be stored on mobile devices and used offline, too.
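The conversion step can be sketched as follows. A hypothetical `tf.Module` stands in for a trained model here (its weight of 1.5 and bias of 15 are made up), and the file name `pizza_model.tflite` is an arbitrary choice. For Keras models, TensorFlow also offers `tf.lite.TFLiteConverter.from_keras_model`.

```python
import tempfile

import numpy as np
import tensorflow as tf


class PizzaModel(tf.Module):
    """Hypothetical stand-in for a trained model: diameter = w * price + b."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(1.5)   # made-up "trained" weight
        self.b = tf.Variable(15.0)  # made-up "trained" bias

    @tf.function(input_signature=[tf.TensorSpec(shape=[None, 1], dtype=tf.float32)])
    def __call__(self, price):
        return self.w * price + self.b


model = PizzaModel()

# Export a SavedModel, then convert it into a single TensorFlow Lite file.
export_dir = tempfile.mkdtemp()
tf.saved_model.save(model, export_dir,
                    signatures=model.__call__.get_concrete_function())
converter = tf.lite.TFLiteConverter.from_saved_model(export_dir)
converter.optimizations = [tf.lite.Optimize.DEFAULT]  # optional post-training quantization
tflite_model = converter.convert()  # the converted model as bytes

with open("pizza_model.tflite", "wb") as f:
    f.write(tflite_model)

# Run the converted model with the TensorFlow Lite interpreter, as a device would.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
input_index = interpreter.get_input_details()[0]["index"]
output_index = interpreter.get_output_details()[0]["index"]
interpreter.set_tensor(input_index, np.array([[15.0]], dtype=np.float32))
interpreter.invoke()
prediction = interpreter.get_tensor(output_index)
print(prediction)
```

On a phone, the `.tflite` file would be loaded by the TensorFlow Lite runtime rather than by Python, but the interpreter calls above mirror what happens there.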

TensorFlow Use Cases

For decades, it was a truism that computers are good at things that are hard for humans, and vice versa. This is because computers were designed to handle structured data (like tables), which they can process in a fraction of the time a human brain would need.

Deep neural networks change all that. Thanks to their many interconnections, neural nets can find patterns in unstructured data such as images, text, or audio, and thereby approach, and sometimes even surpass, human capabilities. Let’s look at some examples of neural networks in action.

Image Recognition

For most people, the first step in their Deep Learning journey is the construction of a neural network that learns to classify images into one of several predefined categories (dubbed “the ‘Hello World’ of neural nets”).

The data can consist of handwritten digits or pictures of clothes. The practical applications of image recognition are endless.

Recently, we have seen neural networks that identify skin cancer by looking at pictures of moles, rivaling the performance of human experts. The networks commonly used for image recognition tasks are Convolutional Neural Nets (CNNs). They excel at classifying images by detecting local patterns and combining these into ever-larger contexts.
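Here is a minimal CNN sketch in `tf.keras`, assuming 28×28 grayscale inputs and 10 categories (the format of the classic handwritten-digit and clothing datasets, which you could load with `tf.keras.datasets.mnist` or `tf.keras.datasets.fashion_mnist`). The filter counts are illustrative choices, not tuned values.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(32, 3, activation="relu"),  # detects small local patterns (edges, strokes)
    tf.keras.layers.MaxPooling2D(),                    # downsamples, so later filters cover a larger area
    tf.keras.layers.Conv2D(64, 3, activation="relu"),  # combines local patterns into larger shapes
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation="softmax"),   # one probability per category
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Untrained forward pass on one blank image.
probs = model(tf.zeros([1, 28, 28, 1]))
print(probs.shape)  # (1, 10)
```

The pooling layers are what turn "local" into "ever-larger": each convolution after a pooling step sees a wider region of the original image.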

Machine Translation

Have you noticed how much better Google Translate has gotten over the past years? This is because, in 2016, Google switched from a statistical translation system to one based on neural networks. Machine translation and other applications of Natural Language Processing (NLP) are instances of sequence-to-sequence learning, which has traditionally been implemented with Recurrent Neural Networks (RNNs).
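The following is a simplified sketch of the classic encoder-decoder (sequence-to-sequence) setup, built from LSTM layers, a widely used kind of RNN. The vocabulary and layer sizes are made-up assumptions, and tokenization, training data, and the step-by-step decoding used at inference time are omitted; real translation systems are far larger.

```python
import tensorflow as tf

# Hypothetical sizes for illustration only.
SRC_VOCAB, TGT_VOCAB, EMBED_DIM, UNITS = 5000, 6000, 64, 128

# Encoder: read the source sentence (token ids) and keep only the final LSTM state.
enc_inputs = tf.keras.Input(shape=(None,), dtype="int32")
enc_emb = tf.keras.layers.Embedding(SRC_VOCAB, EMBED_DIM)(enc_inputs)
_, state_h, state_c = tf.keras.layers.LSTM(UNITS, return_state=True)(enc_emb)

# Decoder: predict each next target token, starting from the encoder's state.
# During training, its input is the target sentence shifted by one token.
dec_inputs = tf.keras.Input(shape=(None,), dtype="int32")
dec_emb = tf.keras.layers.Embedding(TGT_VOCAB, EMBED_DIM)(dec_inputs)
dec_seq = tf.keras.layers.LSTM(UNITS, return_sequences=True)(
    dec_emb, initial_state=[state_h, state_c])
next_token_probs = tf.keras.layers.Dense(TGT_VOCAB, activation="softmax")(dec_seq)

model = tf.keras.Model([enc_inputs, dec_inputs], next_token_probs)
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

# Dummy batch: 2 source sentences of 7 tokens, 2 target prefixes of 5 tokens.
src = tf.zeros([2, 7], dtype=tf.int32)
tgt = tf.zeros([2, 5], dtype=tf.int32)
out = model([src, tgt])
print(out.shape)  # (2, 5, 6000)
```

The encoder's final state is the only thing passed to the decoder, which is exactly the bottleneck that later architectures relaxed.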

Image-to-Image Translation With CycleGANs

If you build a model for image-to-image translation, you can feed it pictures of horses and have it turn them into images of zebras.

While not exactly lifesaving, this application is based on a fascinating architecture: CycleGANs consist of adversarial generator and discriminator networks that compete against each other to learn a mapping from one distribution of images to another.

The cool part is that they do not need paired datasets; they learn the mapping from two unpaired collections of images. CycleGANs are a great project for advanced learners, but be warned: these networks are notoriously hard to build and train.
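Part of what makes unpaired training possible is a cycle-consistency loss: translating a horse into a zebra and back should return the original horse. Here is a minimal sketch of that loss term only, assuming two generator functions `g` (horses to zebras) and `f` (zebras to horses); the adversarial losses and the actual generator and discriminator networks are left out, and the weight of 10 is a commonly used value rather than a requirement.

```python
import tensorflow as tf


def cycle_consistency_loss(real_x, real_y, g, f, weight=10.0):
    """L1 cycle loss: X -> g -> f should return to X, and Y -> f -> g back to Y."""
    reconstructed_x = f(g(real_x))
    reconstructed_y = g(f(real_y))
    return weight * (tf.reduce_mean(tf.abs(reconstructed_x - real_x))
                     + tf.reduce_mean(tf.abs(reconstructed_y - real_y)))


# Random stand-in image batches (4 RGB images of 64x64 pixels each).
horses = tf.random.uniform([4, 64, 64, 3])
zebras = tf.random.uniform([4, 64, 64, 3])

# Trivial "generators" just to exercise the function.
identity = lambda images: images
loss = cycle_consistency_loss(horses, zebras, identity, identity)
print(float(loss))  # 0.0: a perfect round trip costs nothing
```

Because the loss only compares each image with its own reconstruction, it never needs a matching horse-zebra pair.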

How to Use TensorFlow in Python

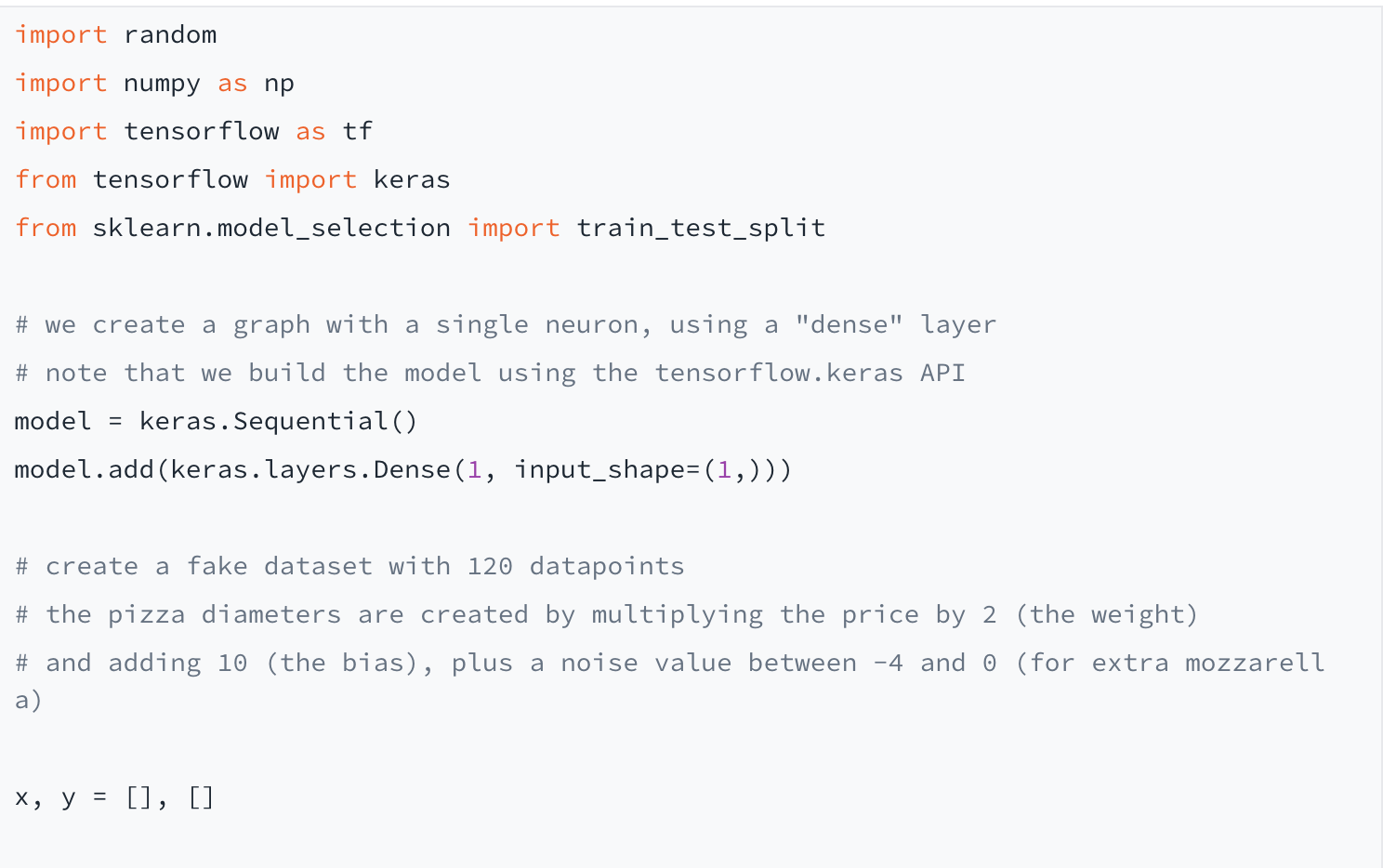

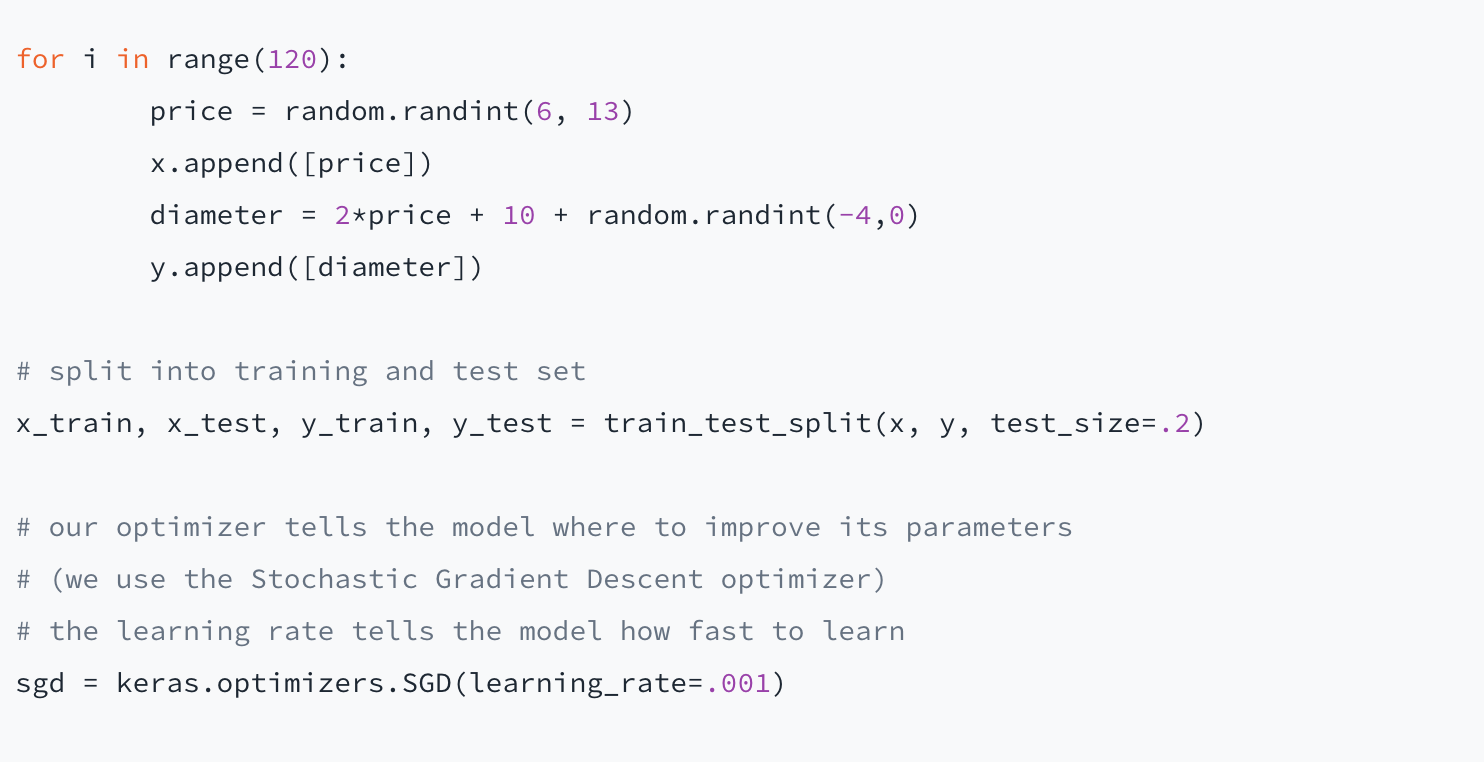

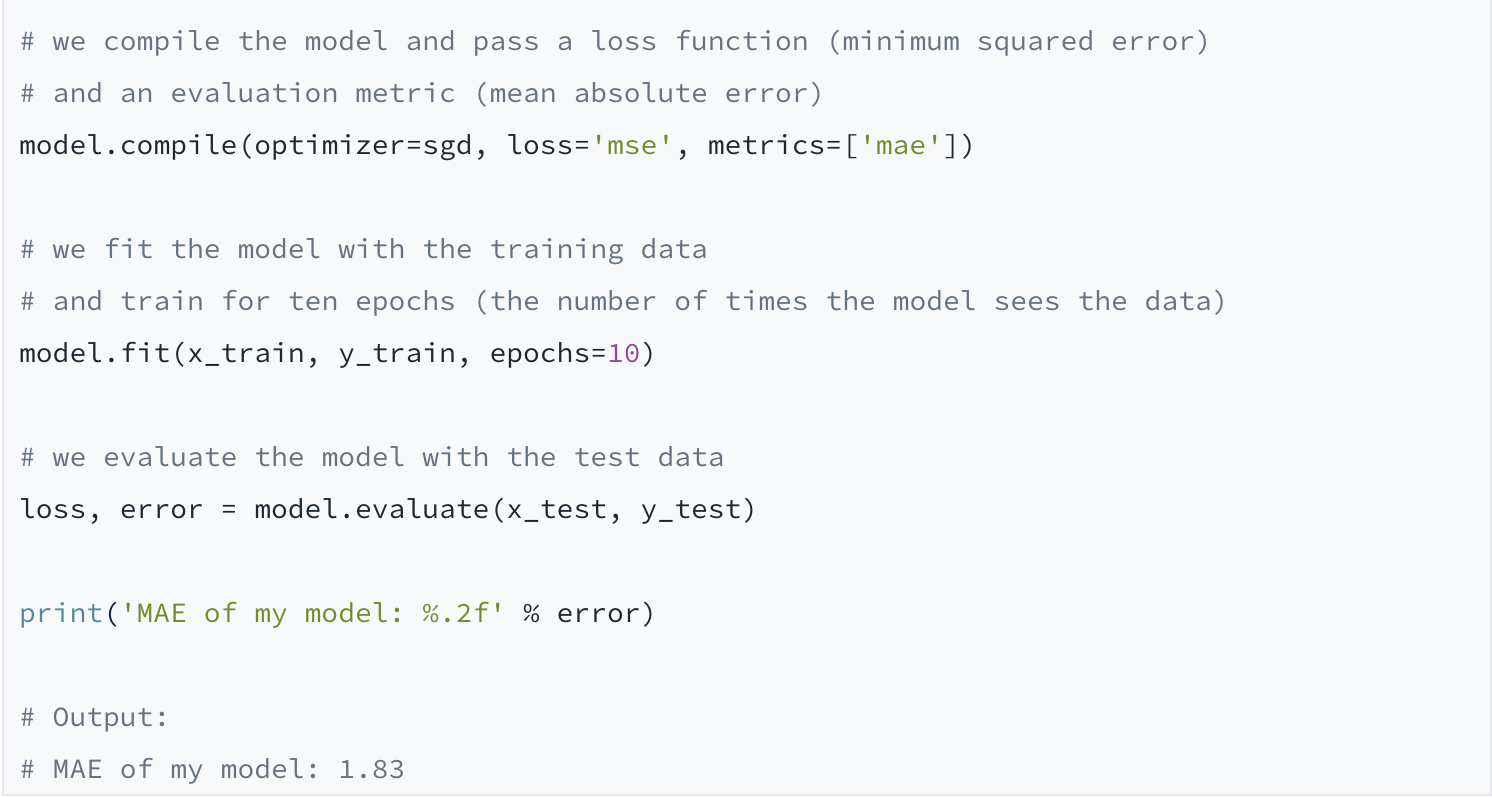

Deep Learning models can look complicated and intimidating at first. Let’s look at a toy example to see the core concepts of TensorFlow in action in Python. In our example, we will predict the diameter of a pizza from its price in dollars:

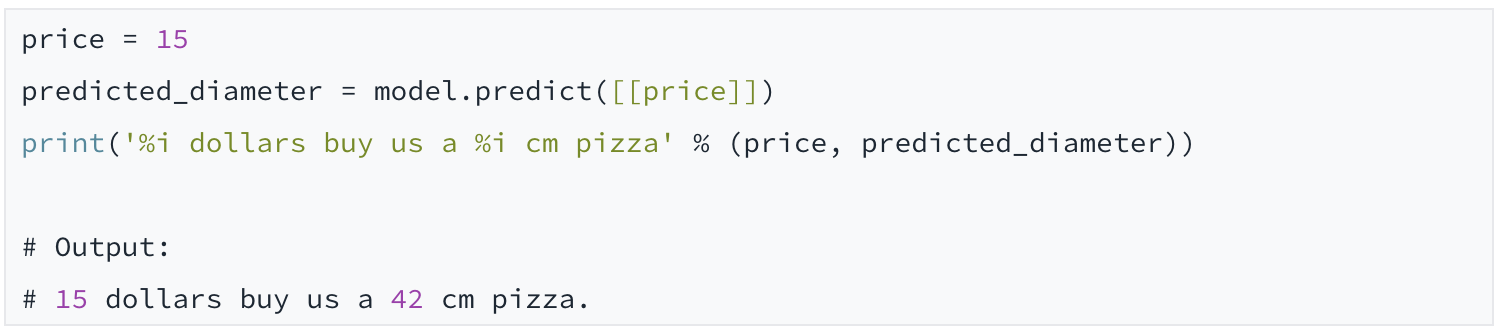

Given our input values, the model’s guesses of the pizza’s diameter were off by 1.8 centimeters on average. That is not bad, especially since our model doesn’t have any hidden layers. Now, let’s assume we just got paid $15 and want to celebrate by spending the money on pizza. How much pizza can we get for our money?

Sounds like a deal!
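If you want to reproduce the idea in code, here is a minimal sketch of a comparable one-node model in `tf.keras`. The price/diameter pairs are made-up placeholder values, not the data used above, so the average error and the $15 prediction it prints will differ from the numbers discussed here. The learning rate and number of epochs are likewise illustrative choices.

```python
import numpy as np
import tensorflow as tf

# Hypothetical training data (NOT the article's dataset): price in dollars -> diameter in cm.
prices = np.array([5.0, 8.0, 10.0, 12.0, 16.0, 20.0], dtype=np.float32).reshape(-1, 1)
diameters = np.array([20.0, 25.0, 28.0, 30.0, 35.0, 40.0], dtype=np.float32).reshape(-1, 1)

# A single Dense unit without activation: diameter = w * price + b, no hidden layers.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(1,)),
    tf.keras.layers.Dense(1),
])

# Squared error drives training; absolute error is easier to read (in cm).
model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.1),
              loss="mean_squared_error",
              metrics=["mean_absolute_error"])
model.fit(prices, diameters, epochs=500, verbose=0)

_, mae = model.evaluate(prices, diameters, verbose=0)
print(f"Average error: {mae:.2f} cm")

# How much pizza does $15 buy, according to this toy model?
predicted = model.predict(np.array([[15.0]], dtype=np.float32), verbose=0)
print(f"Predicted diameter for $15: {predicted[0, 0]:.1f} cm")
```

Since the model is a single linear node, what it learns is just a straight line through the data points; adding hidden layers would let it capture curved relationships.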

Additional Resources

For a visual introduction to neural nets, check out this video series by YouTuber 3Blue1Brown. The channel also has wonderful, visually motivated introductions to Linear Algebra and Calculus, which are essential for understanding the math behind Deep Learning architectures.

If you are interested in the (surprisingly rocky) history of neural networks, have a look at these articles. As for the practical applications of Deep Learning, you can read about skin cancer detection here and about unsupervised image translation using CycleGANs here. If you want to see neural machine translation in action, check out the DeepL translator.

Conclusion

In this article, we learned about building and running neural network graphs in TensorFlow, encountered some interesting applications of neural networks, and built our first TensorFlow model: a simple one-node net.
