R might not be the first language that comes to mind when thinking about NLP. Short for “natural-language processing,” NLP is the discipline of making human language processable by computers. It is a growing field with thousands of applications, some of which you probably use in your daily life. Python has become the most popular language for researching and developing NLP applications, thanks in part to its readability, its vast machine learning ecosystem, and its APIs for deep-learning frameworks. However, R can be an equally good choice if you intend to quantify your language data for NLP purposes. Below, we list some of the most useful NLP libraries in R and walk through a simple NLP programming example.

What Is R?

The statistical programming language R was first released in 1995. In addition to being the initial letter of its authors’ names, Ross Ihaka and Robert Gentleman, the name is a play on that of the programming language S. R is mainly used for statistical analysis and for producing elegant graphs suitable for use in academic publications. It is therefore very popular among statisticians and other researchers who use it to quantify their data and build statistical models. While you can program in R using a standard text editor and R’s command-line interface, many people prefer the integrated development environment (IDE) RStudio. It shows your installed packages, a description of your variables in memory, and your plotted graphs, all at the same time.

The R NLP Ecosystem

Tidytext

One of the most difficult aspects of handling textual data is its inherently unstructured format. Typically, data science operates on structured, table-like data. Manipulating structured data is not only easier but also faster than working with free-form text or individual sentences. The tidytext package offers a partial solution to that problem by representing text as a table. It integrates smoothly with the other tidyverse packages for data manipulation in R like dplyr and ggplot2. Particularly useful is the unnest function, which transforms a text dataframe into a table that has one token per row. In this format, operations like stopword removal are easy to perform using basic table operations.

Word2Vec

Building a vocabulary model comes with many problems, e.g., homonyms (words that look and sound exactly the same but have different meanings), or words that weren’t part of your application’s training dataset (commonly referred to as “OOV”, or “out of vocabulary”). Many such problems are soluble by representing words as high-dimensional vectors known as word embeddings. The guiding principle behind word embeddings is understanding a word’s meaning by looking at it in context, very much in the tradition of linguist John Rupert Firth, who famously proclaimed, “You shall know a word by the company it keeps.” In recent years, the NLP community has come up with many different implementations of that idea. For instance, you can train a skip-gram model by predicting the surrounding context from the target word. In contrast, the continuous bag of words model (CBOW) predicts the target word using supplied context. R’s word2vec package lets you train your own word embeddings with either the CBOW or the skip-gram model.

Stringr

Stringr is R’s string manipulation library. It comes with handy functions to pad, trim, and switch the case of your text strings. More importantly, it implements several functions for pattern matching using regular expressions. These operations are fundamental to working with strings; they allow you to find patterns and manipulate them in various ways. Typically, a good regular expression (commonly abbreviated regex) is one that’s short and powerful. You might use one to find all occurrences of a phone number in your text or all the sentences phrased as questions.

OpenNLP

This package provides an interface to the Apache OpenNLP library, a machine-learning toolkit for the most common NLP operations: POS tagging, named entity recognition, and coreference resolution. OpenNLP comes with pretrained models for various European languages. It also allows you to train your own models. The library is written in Java, so you’ll need to make sure that you have rJava set up on your machine.

Quanteda

The Quanteda package assembles a collection of functions for handling text data. It lets you import your texts as dataframe objects from various file formats and convert them into three different data structures. The corpus data type can hold a text collection with various metadata; a document-feature matrix (dfm) represents text as a bag-of-words table; and the tokens() command turns your text into a list of character vectors. In addition, Quanteda comes with various handy functions — for example, the kwic (keywords in context) function, which lets you examine the contexts in which a word or word pattern appears.

Spacyr

spaCyr is an R wrapper for the popular spaCy Python package. spaCy has a modern feel and offers pretrained models for 16 languages. You can use the package for common NLP tasks like tokenization, lemmatization, dependency parsing, and named-entity recognition. For POS tagging, check out the TreeTagger available via the koRpus package interface.

Example of NLP with R

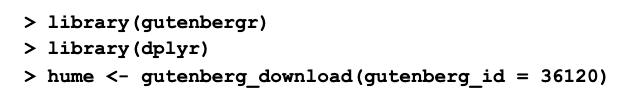

For this practical example of NLP with R in action we’ll use the packages gutenbergr and tidytext. The gutenbergr library offers functions for downloading from and organizing the open-source Gutenberg corpus, home to over 60,000 books.

For this example, we want to download a book by the philosopher David Hume with the ID 36120. In addition to the packages mentioned, we want to use the operator %>% (read “then”; from the dplyr package) which lets us pipe the output of one function into the next.

The gutenberg_download function loads the book in R’s tabular tibble format, with each line from the book being read in as one row.

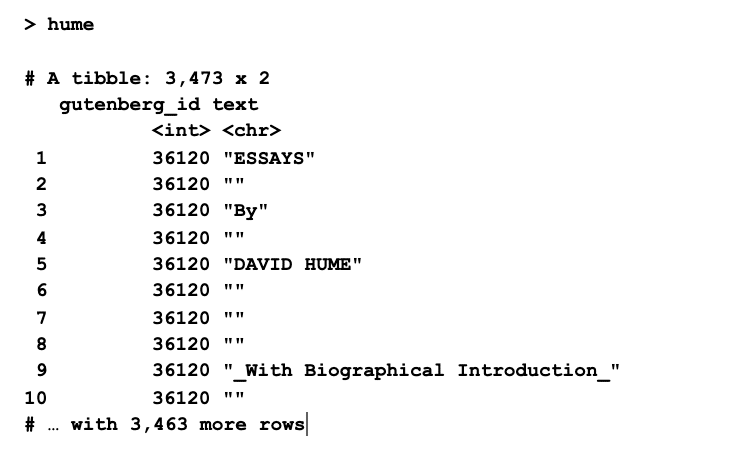

But for easier processing, we’d like to have one word per row. tidytext’s unnest_tokens() does that for us, in addition to removing punctuation and changing all letters to lowercase.

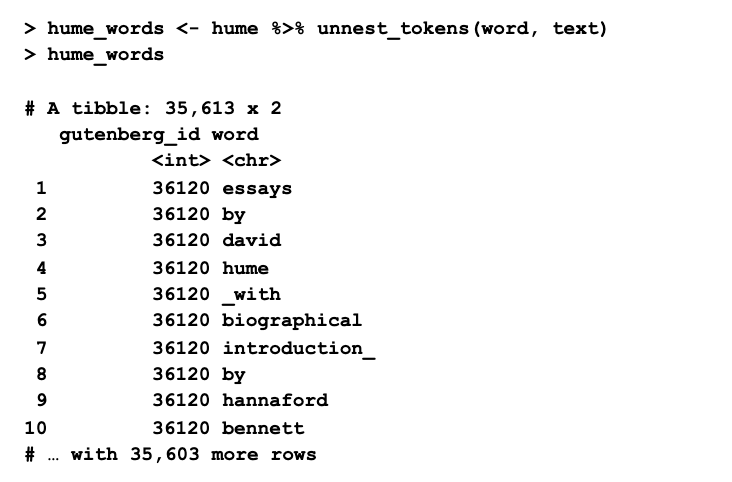

We can use the count function to find the most commonly used words in Hume’s book:

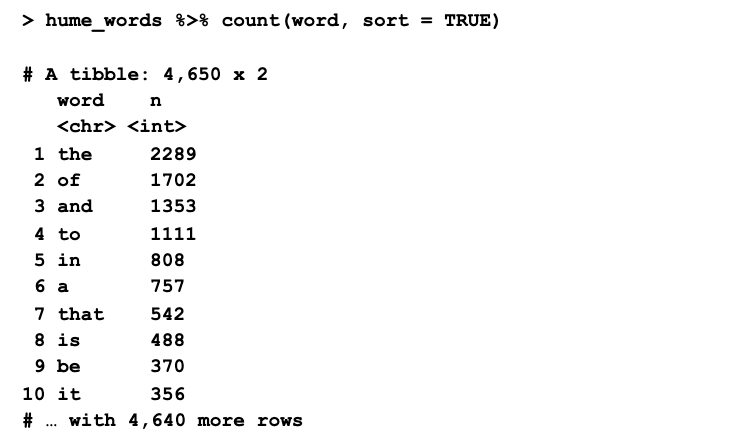

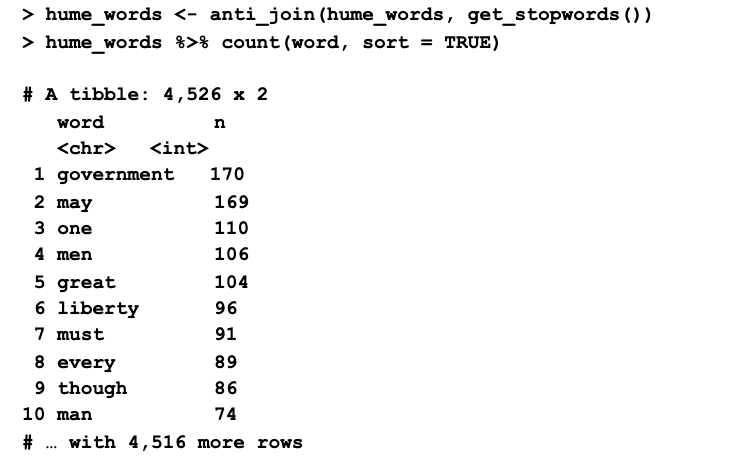

Unfortunately, these words don’t tell us much about the book. They could be from any text written in the English language. In a computational context, these function words are called stopwords. They are very frequent and carry little or no semantic value, which is why most NLP applications end up removing them. Thanks to the tibble format we chose, we can accomplish this elegantly by performing an anti_join on our corpus and the stopword table.

After removing the stopwords, we see that the most frequent words have become much more informative.

Summary

In this tutorial we showed you why, in the context of natural-language processing, R represents a viable alternative to better-known languages like Python or Java. It comes with many useful packages and lets you display your corpora in a clean and manageable table format. We also walked through a simple NLP example of finding the most common content words in David Hume’s essays using the tidytext package.

Want to learn more about NLP? Join our Nanodegree to become a regular expert in natural-language processing.