Learn what the Mars InSight lander is, how it works, and how the skills you learn in the Robotics Software Engineer Nanodegree program can be applied to a project just like this!

In the 1960s, NASA inspired people all over the world to pursue STEM education. The historic lunar landings marked the beginning of an incredible journey of innovation that continues today. NASA remains a pioneer in robotics technology, and this past weekend they reached another milestone when they launched the Mars InSight lander from California—the first interplanetary mission from the West Coast! (I attended the launch in person, and you can read about my experience here!) Despite the foggy day, the launch was an awe-inspiring event, and I am still marveling that humans were able to engineer this giant rocket to make this incredible journey.

Have you ever wanted to apply your skills to a robot that will inhabit another planet?

It’s not as far-fetched as it might sound, and NASA is showing us it can be done. And I can tell you that the skills you need are being taught right here at Udacity. This article covers what the Mars InSight lander is, how it works, and how the skills you learn in the Robotics Software Engineer Nanodegree program can be applied to a project like this!

InSight Mission

The Mars InSight lander is a robot that will travel to Mars. Once there, it will measure seismic activity, and help answer questions about the interior of Mars and how rocky planets like Earth were formed. There are a number of challenges that arise when you send a robot millions of miles away, and the success of the mission depends on how technology addresses those challenges.

The biggest issue is the long communication delay, which rules out direct teleoperation of the system. Instead, operators issue commands in advance, and the robot carries them out with some degree of autonomy. This is where techniques such as localization and path planning come into play, and their importance is exactly why we teach them in the Robotics Software Engineer Nanodegree program.
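To get a feel for how large that delay is, here is a minimal sketch that estimates the one-way signal travel time between Earth and Mars. The two distances are assumed round figures for the closest and farthest separations, not mission data, and the function name is purely illustrative.

```python
# Speed of light in vacuum, km/s (exact by definition of the metre).
SPEED_OF_LIGHT_KM_S = 299_792.458

# Approximate Earth-Mars distances in km. These are assumed round figures;
# the real distance changes continuously as both planets orbit the Sun.
CLOSEST_KM = 55e6
FARTHEST_KM = 400e6


def one_way_delay_minutes(distance_km: float) -> float:
    """Return the one-way signal travel time in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


if __name__ == "__main__":
    for label, distance in (("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)):
        one_way = one_way_delay_minutes(distance)
        print(f"{label}: {one_way:.1f} min one way, {2 * one_way:.1f} min round trip")
```

Under these assumptions the one-way delay ranges from roughly 3 minutes to roughly 22 minutes, so a joystick-style control loop is simply not an option.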

InSight does not need to navigate like a rover, since it is a stationary lander. It does, however, use a robotic arm to place devices on the surface, and to do that successfully, the system needs to know where the arm is and where it is going.

Robotic Arm

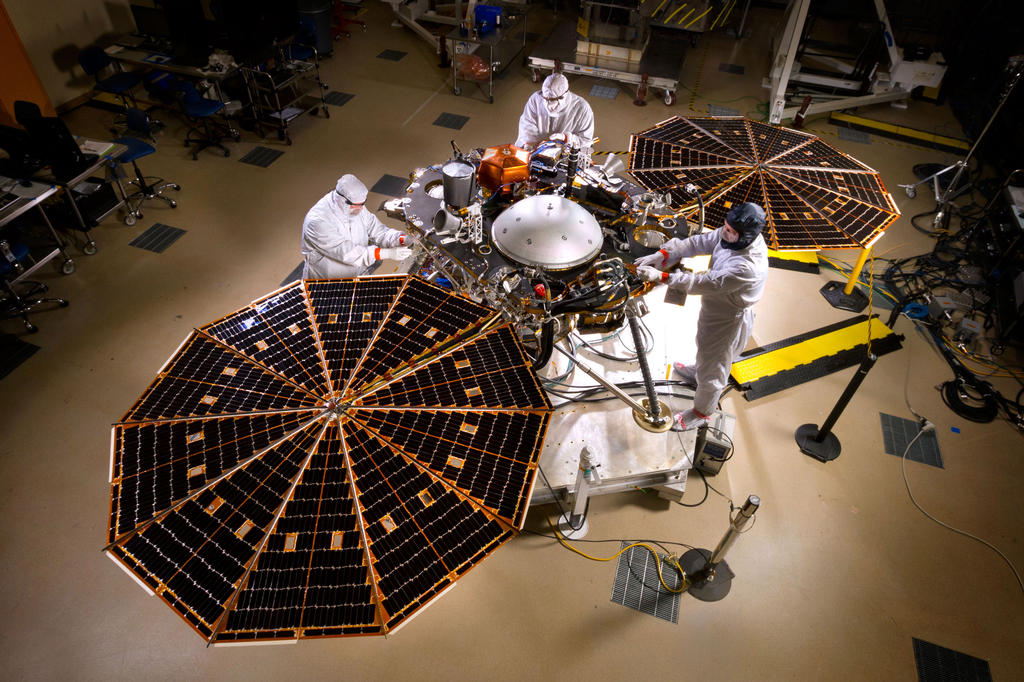

InSight has a 3 DOF (degree of freedom) robotic arm that is mounted to the deck and driven by four motors. Its purpose is to pick up the other tools and sensors that take measurements, and place them on the Martian surface. As previously noted, the system cannot be operated in real time from Earth, so if you issue a command to the arm, how do you know it will reach the objective? The answer is kinematics! Just as we teach in the Robotics Software Engineer Nanodegree program, using matrices that represent the movement of the robotic arm allows you to place the end effector exactly where it is meant to be. This is very important for handling sensitive tools.
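As a rough illustration of the matrix idea, the sketch below computes forward kinematics for a simplified planar arm with three revolute joints. It is not InSight's actual arm model: the planar layout, the link lengths, and the function names are all assumptions made for the example. Each joint contributes one homogeneous transform, and multiplying them in order gives the pose of the end effector.

```python
import math


def joint_transform(angle_rad, link_length):
    """Homogeneous 2D transform: rotate by the joint angle, then move along the link."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [
        [c, -s, link_length * c],
        [s, c, link_length * s],
        [0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]


def forward_kinematics(joint_angles, link_lengths):
    """Chain the per-joint transforms and return the end effector (x, y, heading)."""
    pose = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    for angle, length in zip(joint_angles, link_lengths):
        pose = matmul(pose, joint_transform(angle, length))
    heading = math.atan2(pose[1][0], pose[0][0])
    return pose[0][2], pose[1][2], heading


if __name__ == "__main__":
    # Hypothetical link lengths in meters and joint angles in radians.
    x, y, heading = forward_kinematics(
        [math.pi / 2, -math.pi / 2, 0.0], [1.0, 1.0, 1.0]
    )
    print(f"end effector at ({x:.3f}, {y:.3f}), heading {heading:.3f} rad")
```

With the sample angles, the first link points straight up and the next two point along the x axis, so the end effector ends up at approximately (2, 1). A real arm works in three dimensions with 4x4 matrices, but the chaining principle is the same.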

In our program, we discuss how perception is an essential component of robotics. Without being able to derive updates from the world around you, a robot would be stuck performing a pre-programmed task in a static environment.

For InSight, the engineers used two cameras to help understand the exact area around the robot. The first uses a normal lens and is attached to the second section of the arm. The second is mounted under the deck and uses a fish-eye lens to get a 120° view of the operating area. Even when a human can verify the imagery and the task about to occur, the robot has to operate semi-autonomously to determine whether there are any issues with the action it is about to perform. In the Robotics Software Engineer Nanodegree program, you will have the chance to program your own perception algorithm in the third project of Term 1!

Seismometer

The first instrument that the robotic arm will place on the Martian surface is the seismometer. This is a very sensitive instrument that can detect extremely small vibrations in the planet. Obviously there is a lot of noise that will register with this type of sensor, and the engineers have attempted to minimize that by placing a wind and thermal shield over the device (this will be the second item the robotic arm places on the surface). The sensors are mounted at a 32.5° angle in order to minimize noise in a single axis. Note that I said sensors, plural! There are actually three identical sensors used in this device. By using multiple sensors aligned orthogonally to each other, the system can determine the location of the seismic activity.
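One way to picture how three tilted sensors work together is to recombine their readings into ordinary x, y, and z ground motion. The sketch below is a simplified geometric model, not the instrument's actual processing: it assumes the 32.5° tilt is measured from the horizontal and that the three sensors are spaced evenly at 120° around the vertical axis. The closed-form recombination relies on that even spacing and does not require the axes to be exactly perpendicular.

```python
import math

TILT_DEG = 32.5                     # tilt quoted above, assumed to be from horizontal
AZIMUTHS_DEG = (0.0, 120.0, 240.0)  # assumed even spacing around the vertical axis


def sensor_axes(tilt_deg=TILT_DEG, azimuths_deg=AZIMUTHS_DEG):
    """Unit vectors along each sensor's sensitive axis in (x, y, z) coordinates."""
    tilt = math.radians(tilt_deg)
    return [
        (
            math.cos(tilt) * math.cos(math.radians(az)),
            math.cos(tilt) * math.sin(math.radians(az)),
            math.sin(tilt),
        )
        for az in azimuths_deg
    ]


def project(motion, axes):
    """Reading each sensor would report for a ground motion (dot product with its axis)."""
    return [sum(m * a for m, a in zip(motion, axis)) for axis in axes]


def recombine(readings, tilt_deg=TILT_DEG, azimuths_deg=AZIMUTHS_DEG):
    """Recover (x, y, z) from three readings; valid only for evenly spaced azimuths."""
    tilt = math.radians(tilt_deg)
    x_sum = sum(r * math.cos(math.radians(az)) for r, az in zip(readings, azimuths_deg))
    y_sum = sum(r * math.sin(math.radians(az)) for r, az in zip(readings, azimuths_deg))
    z_sum = sum(readings)
    return (
        2.0 * x_sum / (3.0 * math.cos(tilt)),
        2.0 * y_sum / (3.0 * math.cos(tilt)),
        z_sum / (3.0 * math.sin(tilt)),
    )


if __name__ == "__main__":
    true_motion = (0.3, -0.2, 1.0)  # hypothetical ground motion, arbitrary units
    readings = project(true_motion, sensor_axes())
    print(recombine(readings))
```

Because every sensor picks up part of the vertical and part of the horizontal motion, the direction of a vibration can be reconstructed from the three readings together, which is a first step toward working out where it came from.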

The best designed sensors are only as good as the information we can derive from them. The ability to analyze and utilize data is a key skill in almost any robotics job. Imagine being able to tell what the core composition of Mars is, just by looking at the data that a robot—one you helped engineer and design—was able to measure! In robotics, the applications are almost limitless!

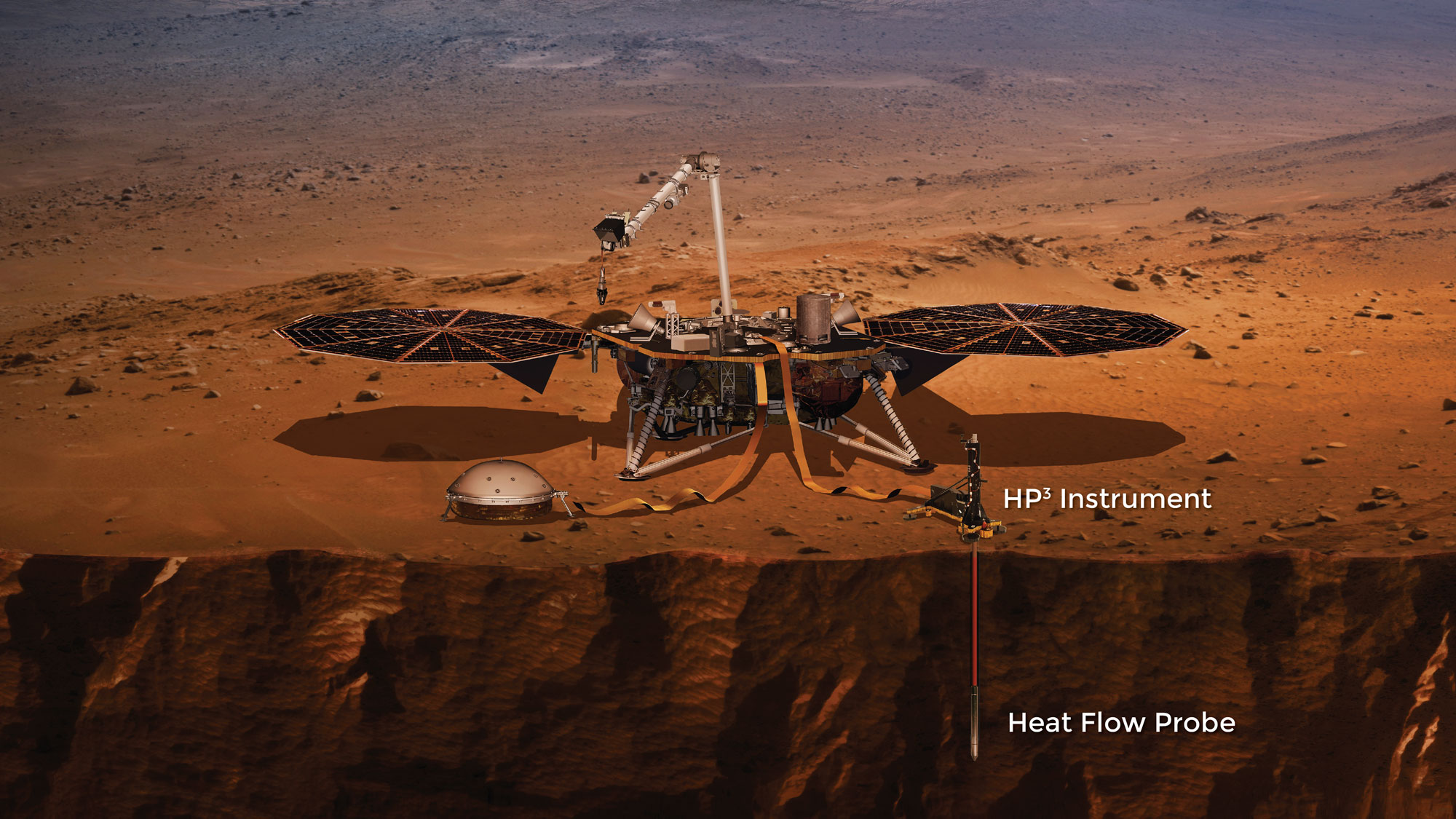

HP3

The Heat Flow and Physical Properties Probe (HP3) consists of two components: a self-hammering probe and a sensor-equipped cable. This system is the third and final item that the robotic arm will place on the Martian surface. The cable has sensors along its entire length to measure how temperature varies with depth.

The self-hammering probe is a fascinating instrument. By combining the core engineering competencies used in robotics (electrical, mechanical, and software), this small probe is able to dig five meters into the Martian crust without outside assistance. It contains an electric motor that performs a kind of internal winding action. As the rotating mechanism is turned, a spring is loaded. When it makes one complete rotation, the spring is released and slams forward, pushing the probe deeper. It moves very slowly (a few millimeters each time), but it is an innovative way to get the job done in a small format. Understanding how to control a simple motor is one of the basics of robotics. At the beginning of Term 2, we discuss how you begin using hardware, and even show you how to get started with a simple circuit using the NVIDIA Jetson TX2.
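A quick back-of-the-envelope calculation shows why "very slowly" is the right description. The sketch below estimates how many hammer strokes it would take to reach five meters. The per-stroke advances are hypothetical values standing in for "a few millimeters", and the model assumes every stroke advances the probe by the same amount, which real soil would not guarantee.

```python
import math

TARGET_DEPTH_M = 5.0  # depth quoted above


def strokes_needed(advance_per_stroke_mm: float, depth_m: float = TARGET_DEPTH_M) -> int:
    """Number of hammer strokes to reach depth_m at a constant advance per stroke."""
    if advance_per_stroke_mm <= 0:
        raise ValueError("advance per stroke must be positive")
    return math.ceil(depth_m * 1000.0 / advance_per_stroke_mm)


if __name__ == "__main__":
    # Hypothetical per-stroke advances in millimeters.
    for advance_mm in (1.0, 2.0, 5.0):
        print(f"{advance_mm} mm per stroke -> {strokes_needed(advance_mm)} strokes")
```

Even at the optimistic end of that range, the motor has to wind and release the spring a thousand times or more, which is why a simple, reliable motor control loop matters so much here.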

There are people all across the globe just like me, who have a lifelong fascination with space travel, with robots, and with artificial intelligence. Are you one of them? If you are, then you know how incredible it is that in just a handful of decades, we’ve made reality out of what were once the wildest of dreams. When you enter the field of robotics, this is really what you’re doing—you’re making dreams come true, using technology.

Today, there are innovative examples of robotics everywhere, and our Robotics Software Engineer Nanodegree program is an ideal way to enter the world of robotics. If your dream is to help shape and create the world of tomorrow, then apply for this program today!

~

Mars InSight Lander images courtesy of NASA, per NASA Image Use Policy.

HP3 probe image courtesy of DLR