For many years, AI practitioners competed for the Loebner Prize, an implementation of the Turing test in which a panel of judges assesses a computer’s “humanness.” The machine passes the test if it manages to convince the judges that it, and not its human competitor, is the real person. How does it do this? Simply by using language. Human-like conversation is but one of the many applications of Natural Language Processing, or NLP for short.

Natural Language Processing is the discipline that makes language understandable for computers, so that they can work with it in a wide range of applications. While the definition of a natural language is fuzzy, in this context it simply means a language that humans use for communication.

In that sense, widely spoken languages like English and Yoruba are natural languages, and so are constructed languages like Dothraki and languages that are no longer spoken, like Ancient Greek. Human languages are characterized by complexity, ambiguity, and redundancy, all of which make Natural Language Processing a very hard problem.

Is NLP the Same as Machine Learning?

Machine learning refers to a family of algorithms that learn their parameters from data instead of having them specified by hand. While a large part of NLP is done with machine learning, it is important to note that rule-based systems are part of natural language processing, too. The first chatbots, for example, worked with hard-coded templates (surprisingly, this was enough to convince some people of the computer’s humanness). Nevertheless, the vast majority of NLP applications have improved dramatically through the use of machine learning, and deep learning in particular has advanced the processing of natural language data.
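To make the contrast concrete, here is a minimal sketch of a template-based chatbot in Python. The rules and responses are hypothetical and chosen purely for illustration. Nothing is learned from data: every behavior is written by hand as a regular-expression pattern paired with a response template.

```python
import re

# Hypothetical hand-written rules: each regex pattern maps to a response template.
# "{0}" is filled with the text captured by the pattern's first group, if any.
RULES = [
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]
FALLBACK = "Please tell me more."


def reply(utterance: str) -> str:
    """Return the response of the first rule whose pattern matches the input."""
    text = utterance.strip().rstrip(".!?")  # drop trailing punctuation
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*match.groups())
    return FALLBACK


print(reply("I am tired."))  # Why do you say you are tired?
print(reply("Hello"))        # Please tell me more.
```

Every new behavior requires yet another hand-written rule, which is why such systems are brittle and hard to scale.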

How Does Natural Language Processing Work?

Natural language processing is an umbrella term for a multitude of different algorithms, but they have some things in common. Most applications run the language data through a preprocessing pipeline before feeding it to the algorithm. This could include stemming or lemmatizing the words, meaning that different word forms are mapped to a common base form (e.g. “running” and “runs” would both be mapped to “run”), or, in certain models, removing commonly used function words (called “stopwords”). Conversely, the data could be enriched by adding part-of-speech information (which itself requires an NLP application, unless it is done manually). In deep learning contexts, words are often transformed into dense, high-dimensional vectors called word embeddings.
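A minimal sketch of such a pipeline in plain Python might look as follows. The stopword list and the suffix-stripping rules are hypothetical simplifications; a real application would use a proper stemmer or lemmatizer (NLTK’s `PorterStemmer` is one example) and a much longer stopword list.

```python
import re

# A tiny, hand-picked stopword list (illustrative only; real lists are much longer).
STOPWORDS = {"a", "an", "and", "are", "is", "of", "the", "to", "in", "while"}

# Suffixes are tried longest first; only the first match is removed.
SUFFIXES = ("ing", "ed", "s")


def crude_stem(word: str) -> str:
    """Strip one common suffix: a rough stand-in for a real stemmer."""
    for suffix in SUFFIXES:
        if suffix == "s" and word.endswith("ss"):
            continue  # keep words like "class" intact
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo consonant doubling ("runn" -> "run"), but keep "ll", "ss", "zz".
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeiouslz":
                stem = stem[:-1]
            return stem
    return word


def preprocess(text: str) -> list[str]:
    """Lowercase, tokenize, drop stopwords, then stem each remaining token."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [crude_stem(token) for token in tokens if token not in STOPWORDS]


print(preprocess("The dog runs while the cat is running"))
# ['dog', 'run', 'cat', 'run']
```

Both “runs” and “running” end up as “run”, so a downstream model treats them as the same word.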

Why Do We Need Natural Language Processing?

You know what they say about the majority of our communication being non-verbal? Well… not on the internet. The ubiquity of the World Wide Web in our lives has led to enormous growth in text-based data, and NLP techniques help us make sense of it. An algorithm can process a million pages of text and summarize them, a task that would quickly overwhelm a human reader.

In addition, NLP can help break down accessibility barriers. While voice assistants like Apple’s Siri or Amazon’s Alexa are a nifty feature for most people, they can be invaluable to people with certain impairments. Likewise, machine translation, along with related tasks such as text simplification, gives people access to a much wider range of documents.

What Are Some Applications of Natural Language Processing?

Speech Recognition

In order to talk to you, a voice assistant first needs to understand what you said. This involves transforming the continuous waveform produced by your voice into discrete meaningful units. From these, the machine reconstructs a representation of the underlying word sequence intended by the speaker. Speech recognition is used in a wide range of applications, like closed captioning, hearing aids, and dictation software.
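Digitally, the waveform arrives as a long sequence of amplitude samples. A common first step toward discrete units is to cut it into short, overlapping frames (25 ms windows advanced in 10 ms steps are typical values) and to compute acoustic features for each frame. The sketch below covers only that framing step, using mean energy as a deliberately simple stand-in for the richer features (such as MFCCs) that real recognizers compute; the 440 Hz test tone is made up.

```python
import math


def frame_signal(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Split a sampled waveform into overlapping, fixed-length frames."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    return [
        samples[start:start + frame_len]
        for start in range(0, len(samples) - frame_len + 1, hop_len)
    ]


def frame_energy(frame):
    """Mean squared amplitude: one very simple per-frame feature."""
    return sum(x * x for x in frame) / len(frame)


# Hypothetical input: one second of a 440 Hz tone sampled at 16 kHz.
SAMPLE_RATE = 16_000
signal = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

frames = frame_signal(signal, SAMPLE_RATE)  # 400-sample frames, 160-sample hop
energies = [frame_energy(f) for f in frames]
print(len(frames))  # 98
```

A recognizer then maps sequences of such per-frame feature vectors to sound units and, eventually, to words.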

Machine Translation

For a long time, translation from one natural language into another by means of a machine was a story of failures. It turned out that language was far more complex than imagined, and the output of translation systems was often mocked. Lately, however, translation systems based on neural networks have produced truly impressive results. Rather than relying on handwritten rules, these systems work with complex deep learning architectures and massive collections of parallel data.

Sentiment Analysis

How do people feel about certain things? This is the question that sentiment analysis systems seek to answer. They assign an input a label or a continuous value that reflects its polarity. This can be a binary label (is the statement positive or negative?) or a value on a more fine-grained scale. The most basic sentiment analyses simply count the positive and the negative words in a text (as listed in a dictionary) and compute a ratio. At the other end of the spectrum, more complex systems can take phenomena like negation or irony into account, too.
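The counting approach fits in a few lines. The two word lists below are hypothetical stand-ins for a real sentiment dictionary, and `(positive - negative) / (positive + negative)` is just one possible way of turning the counts into a ratio; it yields a score between -1 (entirely negative) and 1 (entirely positive).

```python
import re

# Hypothetical mini-lexicons; real sentiment dictionaries hold thousands of entries.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "sad"}


def sentiment_score(text: str) -> float:
    """Return (pos - neg) / (pos + neg), or 0.0 if no lexicon word occurs."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    if pos + neg == 0:
        return 0.0
    return (pos - neg) / (pos + neg)


print(sentiment_score("I love this great phone, but the battery is bad"))  # 0.333...
print(sentiment_score("The movie was not good"))                          # 1.0
```

Note that “not good” still scores as fully positive, because the counter only sees the word “good”. Handling negation is exactly what the more complex systems add.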

Spell Checking

Spell checkers have been around for a while. Here, the system can be simple and dictionary-based (using a distance metric to find the dictionary word most likely intended by a misspelling), or it can take context into account as well (in which case grammar correction is often involved). While there have been many funny stories about auto-correct gone wrong, some spell checkers can be empowering tools for people with dyslexia.
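The dictionary-based variant can be sketched with the Levenshtein edit distance, one common choice of distance metric: the number of single-character insertions, deletions, and substitutions needed to turn one word into another. The tiny `DICTIONARY` list is hypothetical; a real spell checker would use a full word list and usually also weight candidates by how frequent they are.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance via dynamic programming, keeping only one previous row."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute (or keep) the character
            ))
        prev = curr
    return prev[-1]


# Hypothetical miniature dictionary.
DICTIONARY = ["language", "natural", "processing", "machine", "learning"]


def correct(word: str, dictionary=DICTIONARY) -> str:
    """Return the dictionary entry with the smallest edit distance to word."""
    return min(dictionary, key=lambda candidate: levenshtein(word, candidate))


print(correct("langauge"))  # language
```

Swapping two letters, as in “langauge”, costs two edits under this metric; variants such as Damerau-Levenshtein count a transposition as a single edit.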

Spam Filtering

If you have an email account, you have benefited from a spam filter. This, too, can be dictionary-based (with lists of words that are highly indicative of spam). More commonly, however, spam filters use a Bayesian classifier that works with word probabilities.
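The Bayesian approach can be sketched as a multinomial naive Bayes classifier, which is one common variant; production filters differ in many details. The training messages below are made up, and the class name `NaiveBayesSpamFilter` is hypothetical. For each class, the classifier adds the log prior to the log probability of every word given that class, using add-one (Laplace) smoothing so that a word never seen in one class does not zero out its score.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial naive Bayes over word counts with add-alpha smoothing."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokenize(text))

    def _log_score(self, words: list[str], label: str, vocab_size: int) -> float:
        # log P(label) + sum of log P(word | label); logs avoid numeric underflow.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        total = sum(self.word_counts[label].values())
        for word in words:
            count = self.word_counts[label][word]  # 0 for unseen words
            score += math.log((count + self.alpha) / (total + self.alpha * vocab_size))
        return score

    def classify(self, text: str) -> str:
        words = tokenize(text)
        vocab_size = len(set(self.word_counts["spam"]) | set(self.word_counts["ham"]))
        return max(("spam", "ham"), key=lambda label: self._log_score(words, label, vocab_size))


# Made-up training data.
spam_filter = NaiveBayesSpamFilter()
for message in ["win money now", "claim your free prize now", "free money offer"]:
    spam_filter.train(message, "spam")
for message in ["meeting moved to monday", "lunch with the team tomorrow", "project report attached"]:
    spam_filter.train(message, "ham")

print(spam_filter.classify("free money prize"))       # spam
print(spam_filter.classify("team meeting tomorrow"))  # ham
```

The “naive” part is the assumption that words occur independently of each other given the class, which is rarely true but works surprisingly well for spam.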

A Natural Language Processing Example in Python

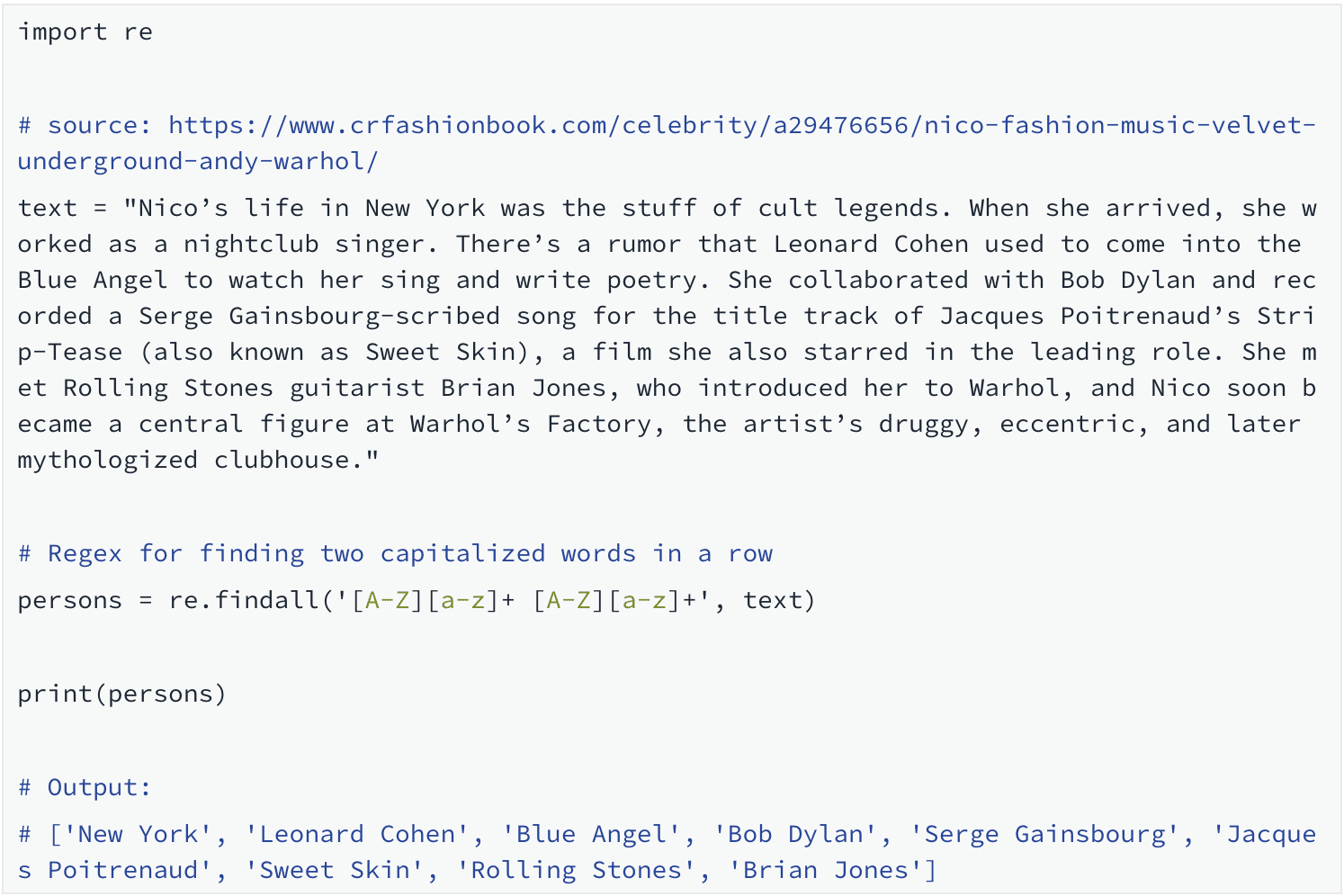

Let’s look at an example of Natural Language Processing in action, using Python. In this example, we will perform named entity recognition: specifically, we want to find the persons mentioned in a text. How should we go about it? We will start with a very naive method. With the help of regular expressions, we will look for all instances of two capitalized words in a row. A regular expression, or regex for short, is a pattern that describes a set of strings; a regex engine uses it to find matching substrings in a text.
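A minimal sketch of this naive pattern, run on a made-up sentence (our own example, not the text used in this article), might look like this. The name `NAME_PATTERN` is hypothetical; `re.findall` returns every non-overlapping match.

```python
import re

# Naive heuristic: two capitalized words in a row are treated as a person's name.
NAME_PATTERN = re.compile(r"\b[A-Z][a-z]+ [A-Z][a-z]+\b")

# Made-up sample text with fictional people.
text = (
    "Maria Lopez met John Smith in New Orleans. "
    "Later, Priya joined them at the Science Museum."
)

candidates = NAME_PATTERN.findall(text)
print(candidates)  # ['Maria Lopez', 'John Smith', 'New Orleans', 'Science Museum']
```

Even on this tiny example, the pattern misses the single-word name “Priya” and flags “New Orleans” and “Science Museum”, neither of which is a person.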

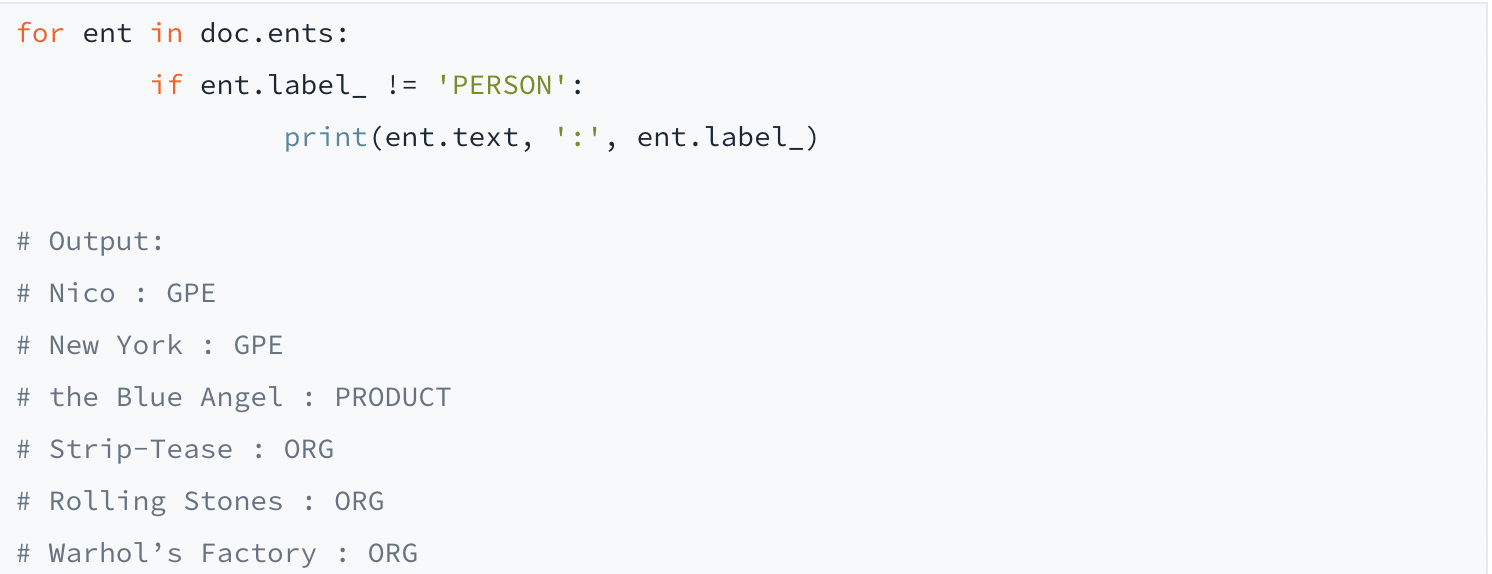

As we can see from the output, our naive approach found some of the persons in the text, while omitting others (like Nico) and including entities that are not persons at all, like New York or the Rolling Stones. Let’s use a more sophisticated method to achieve our goal of extracting only the persons from the text. This time, we are going to work with the popular NLP library spaCy.

spaCy’s named entity recognition is better than our simplistic approach. But why does the name Nico show up only once, when it is mentioned twice in the text? Let’s look at how spaCy categorized the other entities.

spaCy labeled the first instance of “Nico” as a geopolitical entity (GPE). It can assign different labels to the same string within one text because, unlike our regex-based solution, it does not apply a fixed, context-free rule. Instead, its statistical model predicts each label from the surrounding context, so the same name can be classified differently in different sentences.

Summary

In this article, we have learned about the key features of Natural Language Processing. We have encountered some of the most common NLP applications. Finally, we implemented a simple Natural Language Processing example. Using regular expressions and the spaCy library, we identified the mentions of persons in a text.
